Over the last decade, compute has grown 1,200%. And, as computing has grown, so have data centers. There are more than 700 hyperscale centers (10,000-square-foot structures with 5,000 or more servers) in the U.S., each with 100 MW of capacity.

And it’s not enough.

The demand for compute is doubling every two months, making the 36-month build of a new center out of step with the speed of the market.
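
To see how wide that gap gets, here is a minimal sketch of the compounding involved. It takes the two-month doubling figure above at face value and assumes, purely for illustration, that the rate holds steady for the whole build. The function name `demand_growth` is hypothetical.

```python
def demand_growth(months: float, doubling_period_months: float = 2.0) -> float:
    """Growth factor of demand after `months`, given a constant doubling period."""
    return 2.0 ** (months / doubling_period_months)


build_months = 36  # build time for a new hyperscale center, per the article
factor = demand_growth(build_months)

# 36 months / 2 months per doubling = 18 doublings, so 2**18
print(f"Demand multiplies by about {factor:,.0f}x during a {build_months}-month build")
```

Real demand is unlikely to double at a fixed rate for three straight years, so treat the result as an illustration of the mismatch rather than a forecast.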

That makes the Distributed Model a much more attractive approach. Simply put, distribution is a model where data processing and storage are spread across multiple geographically dispersed locations so that capacity is closer to the user. This contrasts with having a single large data center serve an entire region.

Not only does a Distributed approach improve reliability, scalability and performance, it can also be quicker and more cost-effective to deploy. And with half of all the world’s hyperscale centers located in America, distribution can help satisfy many of the data sovereignty regulations that are starting to come into play.

A More Reliable Design

The Distributed Model is more reliable than concentrated capacity because you are not putting all of your eggs into a single basket. Each data center can operate autonomously while still being interconnected through high-speed networks that enable seamless data exchange. Rarely will a single event knock out all of the data centers within a network, so workloads can be redistributed to the remaining sites until all systems are brought back online.
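
The eggs-and-baskets argument can be put into rough numbers. The sketch below assumes that site outages are independent of one another, which is a simplification (a regional grid failure or a shared software bug can hit several sites at once). The 1% outage probability and the function names are hypothetical, chosen only for illustration.

```python
def prob_all_sites_down(p_site_down: float, n_sites: int) -> float:
    """Probability that every site is down at once, assuming independent outages."""
    if not 0.0 <= p_site_down <= 1.0 or n_sites < 1:
        raise ValueError("need 0 <= p_site_down <= 1 and n_sites >= 1")
    return p_site_down ** n_sites


def surviving_capacity_fraction(n_sites: int, failed_sites: int) -> float:
    """Share of total capacity still online when equally sized sites fail."""
    if not 0 <= failed_sites <= n_sites:
        raise ValueError("failed_sites must be between 0 and n_sites")
    return (n_sites - failed_sites) / n_sites


p = 0.01  # hypothetical chance that any one site is down at a given moment
for n in (1, 3, 20):
    print(f"{n:>2} site(s): P(all down) = {prob_all_sites_down(p, n):.3g}")

# Losing one of 20 equal sites still leaves most of the capacity online.
print(f"Capacity left after 1 of 20 sites fails: {surviving_capacity_fraction(20, 1):.0%}")
```

With a single large facility, one outage takes everything offline. With many smaller sites, the same event removes only a slice of capacity.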

A More Scalable Design

Building a hyperscale data center presents some serious challenges. Not only does such a facility require multiple acres of land, but it also has huge power and water requirements. To put this into perspective, a 100 MW data center uses the same amount of power as 80,000 homes in the US. The utility infrastructure for a data center of that size is significant and can take time to plan and build out, meaning that large data centers can’t be built quickly. In addition, the average 100 MW data center will use up to 1.1 million gallons of water a day, which has a significant impact on local water supplies.
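
Those figures are easier to picture once they are broken down per home and per megawatt. This is a quick back-of-the-envelope sketch using only the numbers quoted above. The variable names are illustrative, and the water figure is an upper bound ("up to"), so the per-MW result is an upper bound too.

```python
FACILITY_MW = 100                   # facility size quoted in the article
HOMES_EQUIVALENT = 80_000           # homes with the same total power draw
WATER_GALLONS_PER_DAY = 1_100_000   # upper-bound daily water use

# Average continuous draw per home implied by the comparison: 1.25 kW
kw_per_home = FACILITY_MW * 1_000 / HOMES_EQUIVALENT

# Upper-bound water use per MW of capacity per day: 11,000 gallons
gallons_per_mw_day = WATER_GALLONS_PER_DAY / FACILITY_MW

print(f"Implied draw per home: {kw_per_home:.2f} kW")
print(f"Water per MW per day: up to {gallons_per_mw_day:,.0f} gallons")
```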

Such infrastructure requirements add a significant amount of complexity to ensuring that regulatory compliance standards are met during planning and construction. In addition, larger data centers are often built with future expansion in mind, meaning that not all of the acreage is in use at a given moment. By comparison, smaller 4-5 MW data centers are easier and faster to build. They require less space, have much smaller power needs, use less water, and face fewer compliance issues, while still being fully capable of servicing a given region. Obviously, more data centers will be required in the distributed model, but they can be built more quickly and in a much more targeted fashion, and they reduce capital expenditures because they are built on demand.
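
How many more data centers? A minimal sketch of the count, assuming the goal is simply to match the nameplate capacity of one 100 MW facility with equally sized small sites. The helper `sites_needed` is hypothetical, and real planning would also account for redundancy and regional demand.

```python
import math


def sites_needed(target_mw: float, site_mw: float) -> int:
    """Smallest number of equally sized sites whose combined capacity meets the target."""
    if target_mw <= 0 or site_mw <= 0:
        raise ValueError("capacities must be positive")
    return math.ceil(target_mw / site_mw)


for site_mw in (4, 5):
    count = sites_needed(100, site_mw)
    print(f"{count} sites of {site_mw} MW match one 100 MW facility")
```

Because each site is small, they do not all have to be funded and built up front. Capacity can be added one site at a time as demand appears in a given region.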

A Better Performing Design

We are becoming more and more reliant on our applications, and many of those applications require real-time interactions. Whether an application is processing information or accessing stored data, the farther the user is from the data center, the longer each interaction takes. In the distributed model, users are closer to the source, which reduces the latency of each interaction and allows real-time access to real-time data.
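
The distance penalty has a hard physical floor. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, or about 200 km per millisecond. The sketch below estimates the best-case round trip from distance alone. The distances are hypothetical, and real latency will be higher once routing, queuing and processing are added.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, ignoring all other overhead."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances: a nearby regional site vs. a distant centralized one
for label, km in (("regional site", 100), ("distant hyperscale site", 2_000)):
    print(f"{label:>24}: {km:>5} km -> at least {round_trip_ms(km):.1f} ms round trip")
```

An application that makes several sequential requests per interaction pays this floor each time, which is why shorter distances matter for real-time workloads.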

Data sovereignty laws restrict how certain data is handled in order to protect the private and sensitive data of both citizens and governments, which means that a lot of data cannot cross certain physical borders. (HIPAA and banking regulations are two examples.) As a result, more and more data will have to be processed and stored locally. This is yet another area where the Distributed Data Center Model will shine, as regional data centers can manage data within their physically assigned borders.

The Distributed Model for data center deployment is nothing new, but in the past a 4-5 MW data center was not that appealing because it wasn’t very efficient from a power and water perspective. Companies gravitated to larger data center operations because they offered an economy of scale that delivered Power Usage Effectiveness (PUE) and Water Usage Effectiveness (WUE) that were far superior to those of smaller data centers.
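
For readers new to the two metrics: PUE is total facility energy divided by the energy delivered to IT equipment, so 1.0 is the theoretical ideal. WUE is site water use in liters divided by IT equipment energy in kWh. Lower is better for both. Here is a minimal sketch of the calculations. The sample readings are hypothetical and do not describe any real facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE: total facility energy / IT equipment energy (dimensionless, >= 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def wue(site_water_liters: float, it_equipment_kwh: float) -> float:
    """WUE: site water use in liters / IT equipment energy in kWh (L/kWh)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return site_water_liters / it_equipment_kwh


# Hypothetical readings for one site over the same period
it_kwh = 1_000.0
print(f"PUE: {pue(1_500.0, it_kwh):.2f}")      # 500 kWh went to cooling, lighting, losses
print(f"WUE: {wue(1_800.0, it_kwh):.2f} L/kWh")
```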

A Better Paradigm

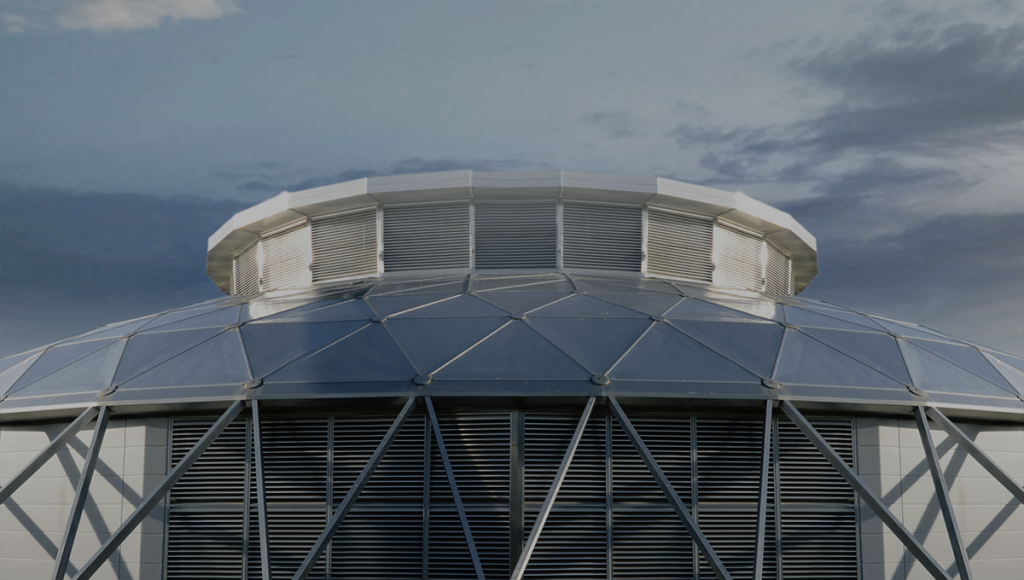

ServerDomes is causing a paradigm shift in that thinking. These hyper-efficient 5 MW data centers not only have a better PUE and WUE than their counterparts, but are also more efficient than most, if not all, hyperscale data centers.

The ServerDome data center is opening up new opportunities for a more agile, sustainable and robust network to meet demand in the Age of Machine Learning.

Sources:

- https://www.statista.com/statistics/871513/worldwide-data-created

- https://www.statista.com/topics/10667/data-centers-in-the-us/#statisticChapter

- https://www.datacenterdynamics.com/en/opinions/an-industry-in-transition-1-data-center-water-use/